Open and Accurate Air Quality Monitors

We design professional, accurate and long-lasting air quality monitors that are open-source and open-hardware so that you have full control on how you want to use the monitor.

This article is now outdated. Please find our latest update here.

Over the past few weeks, many customers have contacted us about an issue where the PM sensors in their indoor monitors report relatively low concentrations of PM1.0, PM2.5, and PM10 compared to other monitors. At first, we thought these were isolated cases, but we are now seeing the issue more frequently and have started investigating it.

If you’re reading this post, there’s a good chance our customer support team referred you to it, as you’ve already encountered the issue yourself. We want to sincerely apologise for your experience with our devices so far. Please know that we are doing our best to address this issue as quickly as possible. Since transparency is one of our core values, we want to share our current findings. We will update this blog post as we learn more about it.

What We Know So Far

Technically speaking, these readings are still within the sensor manufacturer’s specified accuracy: ±10 µg/m³ at 0 to 100 µg/m³ and ±10% at 100 to 500 µg/m³. However, we’ve seen much better accuracy from these sensors in the past, and we want to do everything we can to improve the accuracy of these monitors.
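To make the “within spec” point concrete, here is a minimal Python sketch of the tolerance check described above. `within_spec` is a hypothetical helper, and we assume the tolerance band is chosen by the reference concentration; the datasheet’s exact convention may differ. It shows how a sensor reading 0 µg/m³ while the true level is 8 µg/m³ still passes.

```python
def within_spec(reading: float, reference: float) -> bool:
    """Check a PM2.5 reading (ug/m3) against the manufacturer tolerance.

    Assumption: the band is selected by the reference concentration:
    +/-10 ug/m3 absolute for 0-100 ug/m3, +/-10 % relative for 100-500 ug/m3.
    """
    if reference < 0 or reference > 500:
        raise ValueError("the stated tolerance only covers 0-500 ug/m3")
    if reference <= 100:
        tolerance = 10.0               # absolute band at low concentrations
    else:
        tolerance = 0.10 * reference   # relative band at higher concentrations
    return abs(reading - reference) <= tolerance


# A sensor stuck at 0 while the true level is 8 ug/m3 is still "in spec".
print(within_spec(0, 8))
```

This is why a low-reading sensor can pass a spec check while still being clearly less accurate than a healthy one.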

Preliminary Results

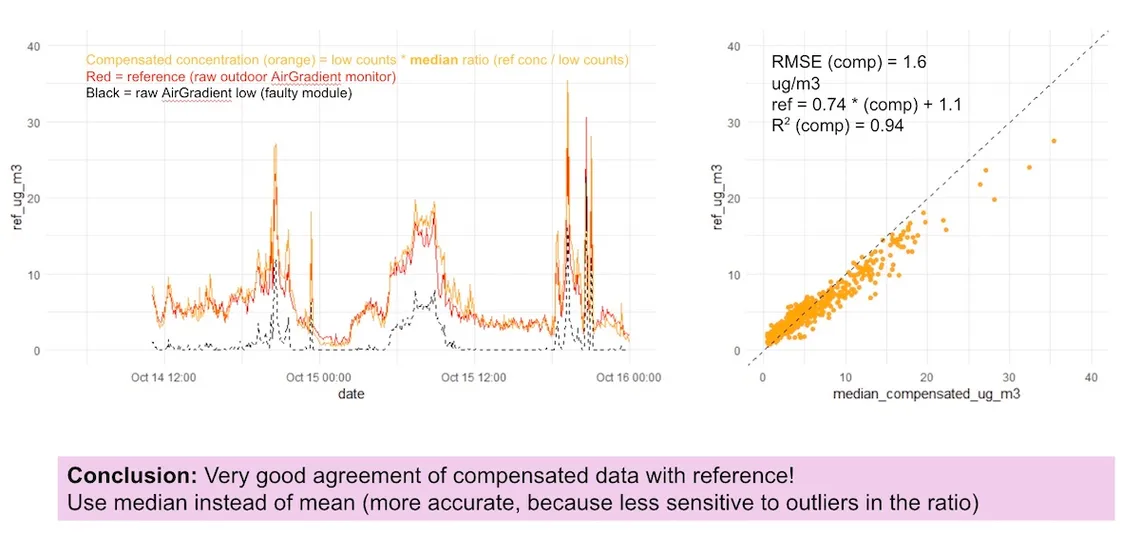

With our very limited datasets, we found that applying a correction factor to the PM0.3 count (which these sensors still report, albeit at far lower counts than usual) gives results very similar to those of a working PMS5003. Anika and Siriel from our science team have worked hard to work out these correction factors, and we believe we are now on the right track.

While most people are familiar with PM2.5 concentrations, we needed to use the PM0.3 count for this correction factor. Many of the problem monitors report a particle mass concentration of 0 µg/m³, and multiplying 0 by any scaling factor still gives 0. These monitors do, however, still report PM0.3 counts, albeit very low ones, which gives the correction something to work with.
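The post does not say how the correction factors were derived. As a sketch, assuming an ordinary least-squares fit of colocated reference PM2.5 against the problem sensor’s PM0.3 count, it could look like this in Python. `fit_correction` is a hypothetical name, and the numbers in the usage note are made up for illustration.

```python
def fit_correction(pm03_counts, reference_pm25):
    """Ordinary least-squares fit of reference PM2.5 against PM0.3 count.

    Returns (scaling_factor, intercept) such that
    reference_pm25 ~= scaling_factor * pm03_count + intercept.
    """
    n = len(pm03_counts)
    if n < 2 or n != len(reference_pm25):
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(pm03_counts) / n
    mean_y = sum(reference_pm25) / n
    # Sums of squares and cross-products about the means
    sxx = sum((x - mean_x) ** 2 for x in pm03_counts)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(pm03_counts, reference_pm25))
    if sxx == 0:
        raise ValueError("PM0.3 counts must vary to fit a slope")
    scaling_factor = sxy / sxx
    intercept = mean_y - scaling_factor * mean_x
    return scaling_factor, intercept
```

For example, `fit_correction([100, 200, 300, 400], [2, 5, 8, 11])` returns a scaling factor of about 0.03 and an intercept of about -1.0. Fitting against the count rather than the sensor’s own PM2.5 matters because a series stuck at 0 µg/m³ gives the fit nothing to scale.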

With this correction factor applied, we see an R² value of 0.94 between the working and corrected monitors, which indicates a very high correlation. This is very similar to the R² we see between monitors that are working as intended, so we believe this correction factor works very well.
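R² between two colocated series can be read as the squared Pearson correlation; that interpretation is our assumption, as the post does not define it. A minimal Python sketch, with `r_squared` as a hypothetical helper:

```python
def r_squared(a, b):
    """Squared Pearson correlation between two equal-length series."""
    n = len(a)
    if n < 2 or n != len(b):
        raise ValueError("need two equal-length series with at least 2 points")
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("both series must vary")
    return cov * cov / (var_a * var_b)
```

Note that this R² is unchanged by a constant scale or offset, so a sensor that reads exactly half the true value still scores 1.0. That is one reason an error measure such as RMSE is also worth checking.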

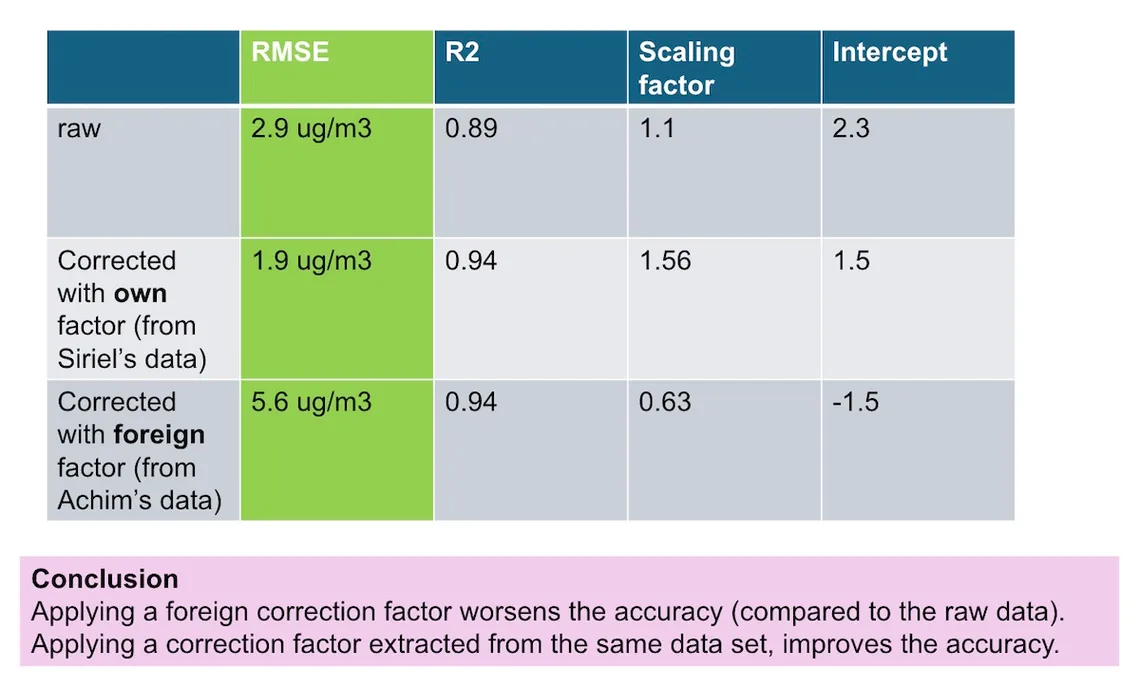

This approach works across different monitors, but the correction factor is not the same for each one. In a second case (shown below), Siriel has two colocated monitors: an Open Air monitor that is working as intended and a miscalibrated ONE. When we apply the correction factor from the previous dataset, we get a greater RMSE (root mean squared error) than with the raw data. However, if we apply a correction factor derived from this same dataset, the RMSE improves significantly. We believe the correction factors may depend on the shipment the sensor came from.
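RMSE is the square root of the mean squared difference from the reference, expressed in the same units (µg/m³). The comparison described above could be sketched in Python as follows. `rmse` and `apply_correction` are hypothetical helpers, and the correction is assumed to be linear in the PM0.3 count, as in the formula later in this post.

```python
import math


def rmse(reference, measured):
    """Root mean squared error between two equal-length series (ug/m3)."""
    if not reference or len(reference) != len(measured):
        raise ValueError("need two non-empty series of equal length")
    squared = [(m - r) ** 2 for r, m in zip(reference, measured)]
    return math.sqrt(sum(squared) / len(squared))


def apply_correction(pm03_counts, scaling_factor, intercept):
    """Linear correction of PM0.3 counts to PM2.5 (ug/m3)."""
    return [scaling_factor * c + intercept for c in pm03_counts]
```

With colocated data, comparing `rmse(reference, raw_pm25)` against `rmse(reference, apply_correction(pm03_counts, s, b))`, once with factors from another dataset and once with factors fitted to the same dataset, gives the kind of comparison described above.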

Again, please note that these findings are preliminary, and we currently have very limited datasets to work with. If you happen to have an AirGradient ONE with a problem sensor and either an Open Air or a working AirGradient ONE, and want to help us identify the issue and improve our correction factors, please feel free to colocate them and reach out to our customer support team with the monitors’ serial numbers so we can investigate further. Any help we can get would be greatly appreciated!

While this isn’t how we wanted to test it, we think it also shows the potential for calibrating sensors further in the future. In combination with the reference-grade monitor we now have (more on that below), we may even be able to create correction formulas that are more accurate than those applied at the factory.

Why Did We Not Notice This Issue?

Many customers purchase pre-assembled monitors from us because they trust us and our lab testing, which we perform before sending the monitors out. I’ve already had a few customers ask how the monitor passed our testing with this issue present, and we believe it’s important to address this before discussing the steps we will take to ensure this doesn’t happen again.

Within our test chamber, we have purification systems running, which means the air is almost always very clean and, therefore, has very low particulate matter concentrations. To test the monitors, we create spikes in PM using methods such as burning incense. This causes an extreme but short spike in PM, and we compare the results of each monitor to our expected result. This works well to show the monitors' accuracy at higher concentrations, but it does little to uncover issues that occur at low particulate matter levels such as this one.

As a result, for most of the time the monitors spent in the test chamber, the PM concentration was close to zero, and these periods could not distinguish monitors that read lower than usual from those reading correctly.

What Are We Doing to Fix the Issue?

Initially, we believed these sensors were faulty. However, Achim, along with our team of scientists, noticed that the PM0.3 counts from these sensors correlate well with the PM2.5 concentrations from working sensors. As such, we no longer believe these sensors are faulty; rather, we believe they are miscalibrated.

While we currently have limited datasets to work with, our team has found that we can use the PM0.3 count to calibrate these sensor modules correctly. We don’t yet know how many correction algorithms we will need, or whether they will apply per sensor, per batch or on some other basis, but we are confident we can solve the issue through these compensations.

We have also begun comparing sensors from different batches so we can identify trends across batches and create correction algorithms for them. We believe this may even allow us to improve the accuracy of our monitors in the future.

What Are We Doing to Prevent an Issue Like This From Occurring Again?

While we are doing our best to fix this issue for customers who are already affected, it’s equally important to ensure it doesn’t happen again. We had already been working on improving our testing procedures, and unfortunately, the timing of this issue was poor: had it occurred a few months later, we believe we would have caught it. This is due to two key changes:

In what we believe is a first (or near first) for an air quality monitor company, we’ve recently purchased a Palas Fidas reference instrument, which is now set up in our lab. This monitor will allow us to carefully calibrate and test our monitors against a reference instrument that we know is accurate. Due to the high costs of such monitors, most companies only get the opportunity to compare their devices to reference-grade monitors through studies and other collaborations.

However, as we now have a reference-grade instrument set up in our own lab, we will have full access to it and can use it whenever we want. This gives us an accurate baseline to compare our monitors against, so we can much more easily identify errors, miscalibrated sensors, and faulty sensors. We already have the device and are currently finalising its setup.

Until recently, we could not hold a consistent PM concentration above very low levels. This meant we were unable to identify this issue, as it is most noticeable at low concentrations. However, over the past few months, our team has been building a chamber that can hold a consistent PM concentration at varying levels. This means we will no longer need to rely on our method of temporarily ‘spiking’ PM2.5 levels.

Combined with the reference-grade instrument we now have, we can test our monitors and sensors far more thoroughly across a wider range of PM concentrations. Since we can maintain these concentrations consistently, we can also test the longer-term performance of sensors, which will reveal faults or miscalibrations better than our current tests.

It’s important to note that monitors that are currently in transit may still have this issue. Furthermore, DIY kits do not go through this testing.

We will continuously update this post.

Hi everyone. Thank you for bearing with us over the past week. We’ve continued working hard to address this issue and have some news to share.

Firstly, we have moved a group of these sensors to our testing facility and are now comparing them alongside sensors from other shipments so we can identify differences between each group. With our Palas Fidas reference-grade instrument now set up, we can compare these sensors to a known-accurate baseline, which has allowed us to better understand the differences in calibration between sensor batches. It has also helped us speed up creating a correction for the sensors that are currently causing issues.

Below, you can see a photo of Nick and Guide calibrating the Palas Fidas in our testing facility in Chiang Mai. The wall behind them holds the monitors we are currently testing.

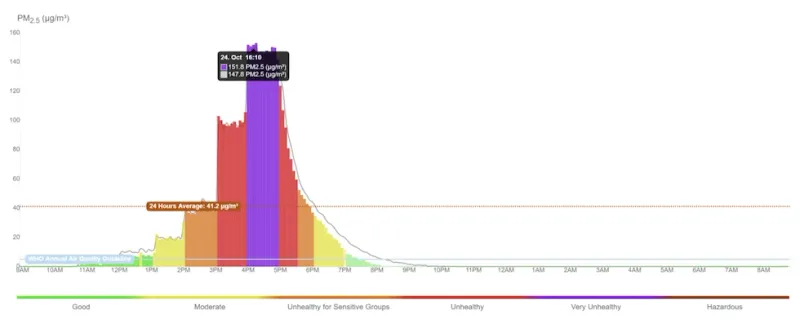

We’ve also been trialling our new test chamber, and our new tests for fully assembled monitors are far more thorough. We can now maintain consistent PM2.5 levels, allowing us to test monitors from very low concentrations all the way up to 150 µg/m³. This will allow us to identify issues like this one before monitors are shipped. The image below shows data from a monitor that has been through this procedure.

As you can see, we first raise the particle concentration in the room to 10 µg/m³, then to 20 µg/m³, 40 µg/m³, 100 µg/m³, and 150 µg/m³, holding each level for one hour. This makes it easy to identify issues that only occur at certain concentrations.
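The stepped protocol lends itself to a per-step check. The Python sketch below compares a monitor’s mean reading with the reference mean for each one-hour step. `evaluate_steps`, the data layout and the default tolerances are hypothetical placeholders; the post does not state the actual pass criteria.

```python
from statistics import mean

# Target PM2.5 levels (ug/m3) from the protocol above, each held ~1 hour.
STEP_LEVELS_UGM3 = (10, 20, 40, 100, 150)


def evaluate_steps(reference_by_step, monitor_by_step,
                   rel_tolerance=0.2, abs_tolerance=2.0):
    """Return {level: passed} for each step of the test.

    Both inputs map a target level to the PM2.5 readings logged during that
    step. A step passes if the monitor's mean lies within
    max(abs_tolerance, rel_tolerance * reference mean) of the reference mean.
    The tolerance values are illustrative placeholders only.
    """
    results = {}
    for level in STEP_LEVELS_UGM3:
        ref = mean(reference_by_step[level])
        mon = mean(monitor_by_step[level])
        allowed = max(abs_tolerance, rel_tolerance * ref)
        results[level] = abs(mon - ref) <= allowed
    return results
```

A monitor that reads about half the reference at 10 and 20 µg/m³ but stays close at higher levels fails only the low steps, which is the kind of pattern a short high spike would not reveal.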

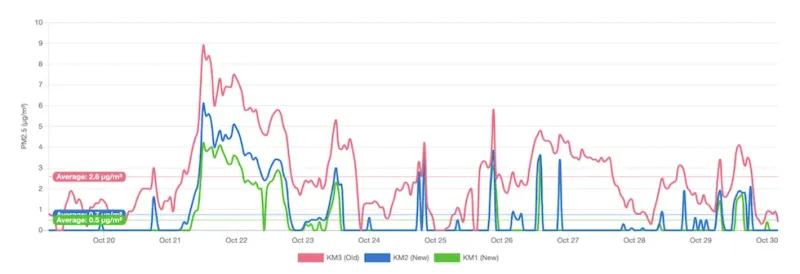

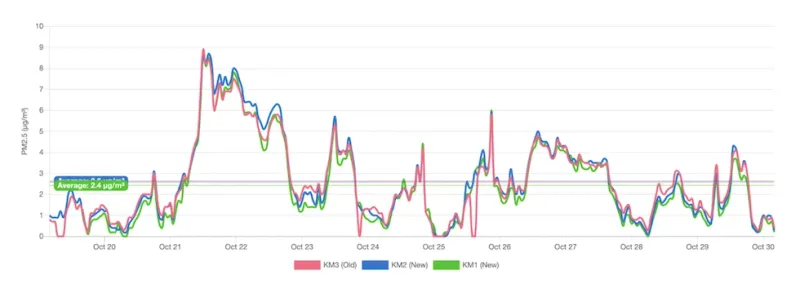

Being able to compare sensors with each other, we’ve discovered that Plantower sensors perform differently depending on their batch. As you can see in the graph below, we compared five sensors from the problem batch (2023121) with five sensors from another batch. The difference is immediately obvious, but it is also consistent within each batch. In the short term, this will allow us to apply corrections on a per-batch basis. The longer-term prospect is even more exciting: we want to begin implementing corrections, perhaps even on a per-sensor basis.

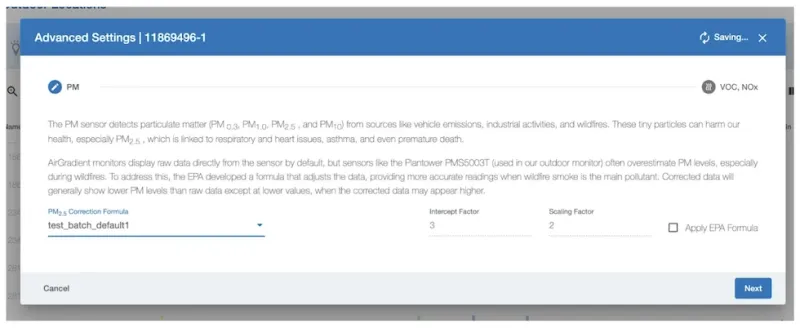

While we are always looking for more data, we believe we now have enough to confirm that our corrections work, and we’re now implementing them on the dashboard. You can see our test interface below, although it still contains placeholders. By default, we will provide scaling factors and intercepts for these sensors and for future batches. For anyone who wants to adjust these values further, a custom setting will give full control over their PMS5003 corrections.
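The planned settings amount to a simple precedence rule: a custom correction, if set, overrides the default for the sensor’s batch, and a batch without a default gets no correction. A minimal Python sketch with hypothetical names (`Pms5003Correction`, `resolve_correction`); it is not the dashboard’s actual code.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Pms5003Correction:
    """Scaling factor and intercept applied to the PM0.3 count."""
    scaling_factor: float
    intercept: float = 0.0


def resolve_correction(
    batch: str,
    batch_defaults: Mapping[str, Pms5003Correction],
    custom: Optional[Pms5003Correction] = None,
) -> Optional[Pms5003Correction]:
    """Pick the correction to apply to one sensor.

    A user-supplied custom correction always wins; otherwise fall back to
    the default for the sensor's batch. None means no correction is applied.
    """
    if custom is not None:
        return custom
    return batch_defaults.get(batch)
```

Keeping the per-batch defaults in a lookup table means a newly characterised batch only needs a new entry, not a code change.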

We still need to finalise this addition to the dashboard, but we believe this functionality will be released early next week. We appreciate your patience. Please keep an eye on the dashboard for this new feature under the ‘Advanced Settings’ tab.

While this isn’t how we had intended to implement the feature, we are excited to release this functionality and update our testing facility. These changes give us a lot of potential to further improve the accuracy of our devices in the future.

Before concluding this update, I would also like to thank the community members who have helped us with testing. Your help has allowed us to roll out this fix as quickly as we did, and we appreciate your support!

Our team has been working hard to get to the bottom of this issue, and our science team has now reached some further conclusions: the PMS5003 sensor errors are consistent within each batch, and the formulas have worked very well in our testing so far.

This is very good news. It confirms our earlier beliefs and means that we can provide batch-wide corrections, applied by identifying which batch each user’s sensor belongs to. If you’re curious about the exact formula Anika has developed, you can find it below.

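```python
def calibrate_pm25(pm03_count, pm25_ugm3, scaling_factor, intercept):
    """Illustrative wrapper around the batch correction formula.

    pm03_count: the sensor's PM0.3 particle count
    pm25_ugm3: the sensor's own PM2.5 reading in ug/m3
    scaling_factor, intercept: the values for the sensor's batch
    """
    # Apply scaling factor and intercept
    pm25_calibrated_low = scaling_factor * pm03_count + intercept

    # Use the calibrated data only for PM2.5 < 31 ug/m3;
    # otherwise keep the sensor's own PM2.5 reading
    if pm25_calibrated_low < 31:
        pm25_calibrated = pm25_calibrated_low
    else:
        pm25_calibrated = pm25_ugm3

    # Make sure that no negative values are produced
    if pm25_calibrated < 0:
        pm25_calibrated = 0
    return pm25_calibrated
```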

The scaling factor and intercept vary by batch, which is why we apply the same formula, with different values, to each batch. So far, we’ve tested four batches against ‘reference’ Plantower sensors that are working correctly, and you can find our scaling factors and intercepts below.

| Batch | Scaling factor | Intercept (µg/m³) |
|---|---|---|
| 20231218 | 0.03701 | -0.66 |
| 20240104 | 0.0319 | -1.31 |
| 20231030 | 0.03094 | -1.17 |
| 20231008 | Doesn’t need correction | n/a |
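As a worked example, take batch 20231218 from the table above and two hypothetical PM0.3 counts, 500 and 1000, and run them through the formula:

$$
\begin{aligned}
0.03701 \times 500 + (-0.66) &= 18.505 - 0.66 = 17.845~\mu\mathrm{g/m^3} < 31 &&\Rightarrow \text{use } 17.845~\mu\mathrm{g/m^3} \\
0.03701 \times 1000 + (-0.66) &= 37.01 - 0.66 = 36.35~\mu\mathrm{g/m^3} \ge 31 &&\Rightarrow \text{use the sensor's raw PM2.5}
\end{aligned}
$$

The first result is below the 31 µg/m³ threshold, so the calibrated value is used; the second is not, so the formula falls back to the sensor’s own PM2.5 reading.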

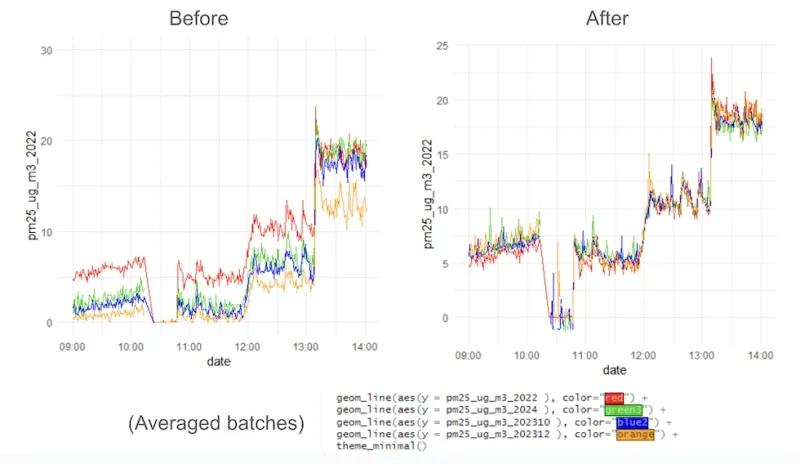

To further check that this formula and these values work correctly, we’ve been testing monitors from different batches. Below, you can see how applying the algorithms above aligns the different batches. The formula corrects the errors very well, and these results show precision similar to what we would expect between two sensors working as intended.

We have been confident from the beginning that these fixes would work, but we didn’t want to rush out anything that wasn’t thoroughly tested and known to work. We’ve now tested different batches of sensors and applied the formula multiple times with good results. With these findings, we will be pushing these fixes to the dashboard soon.

With further testing, Anika uncovered an issue with our previous batch corrections at very low concentrations. It was caused by the intercept in the previous formula, so the formulas have now been adjusted to use an intercept of 0 for every batch.

Following testing at very low concentrations (<5 µg/m³), we’ve found that these new batch corrections perform better at low concentrations. There is a slight overestimation of PM2.5 at higher concentrations, but it is very minor and far below the manufacturer’s stated error. We will not apply the formula at corrected values above 30 µg/m³, as it is not needed there.

Below are the new scaling factors, re-fitted now that the intercept is fixed at 0.

| Batch | Scaling factor | Intercept (µg/m³) |
|---|---|---|
| 20231218 | 0.03525 | 0 |
| 20240104 | 0.02896 | 0 |
| 20231030 | 0.02838 | 0 |
| 20231008 | Doesn’t need correction | n/a |
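With the intercept fixed at 0, the least-squares scaling factor has a closed form, s = Σxy / Σx², where x is the PM0.3 count and y the reference PM2.5. The post does not describe how the new factors were fitted, so this method is an assumption. The sketch also shows one plausible way a negative intercept hurts at very low concentrations: with the clamp to 0, the old 20231030 formula maps any PM0.3 count below about 1.17 / 0.03094 ≈ 38 to 0 µg/m³. Both function names are hypothetical.

```python
def fit_through_origin(pm03_counts, reference_pm25):
    """Least-squares scaling factor with the intercept fixed at 0.

    Minimises sum((y - s * x) ** 2), which gives s = sum(x * y) / sum(x * x).
    """
    sxx = sum(x * x for x in pm03_counts)
    if sxx == 0:
        raise ValueError("PM0.3 counts must not all be zero")
    sxy = sum(x * y for x, y in zip(pm03_counts, reference_pm25))
    return sxy / sxx


def corrected_low(pm03_count, scaling_factor, intercept=0.0):
    """Low-range correction only (no 31 ug/m3 switch); negatives clamped to 0."""
    return max(scaling_factor * pm03_count + intercept, 0.0)
```

For a hypothetical count of 30, `corrected_low(30, 0.03094, -1.17)` returns 0.0, while the revised factor gives `corrected_low(30, 0.02838)` of about 0.85 µg/m³.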

Below you can see the effect of these updated corrections. The red line represents a monitor working as intended, while the other two lines are monitors with sensors from the 20231218 and 20231030 batches.

Before:

After:

As you can see, after applying the updated correction formulae, the data agrees much more closely with the reference data.

We have released a dashboard update for all users, and you can now select the correction factor if you have an affected sensor.

Here are the instructions on how to identify and fix this issue.
